On the Difficulty of Training Recurrent Neural Networks - Razvan Pascanu, Tomas Mikolov, Yoshua Bengio

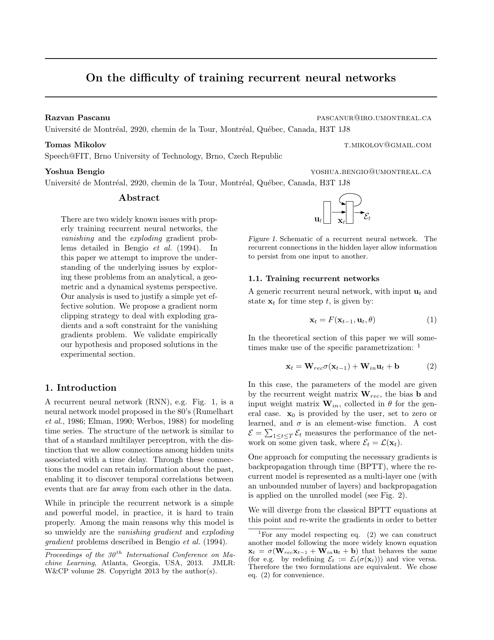

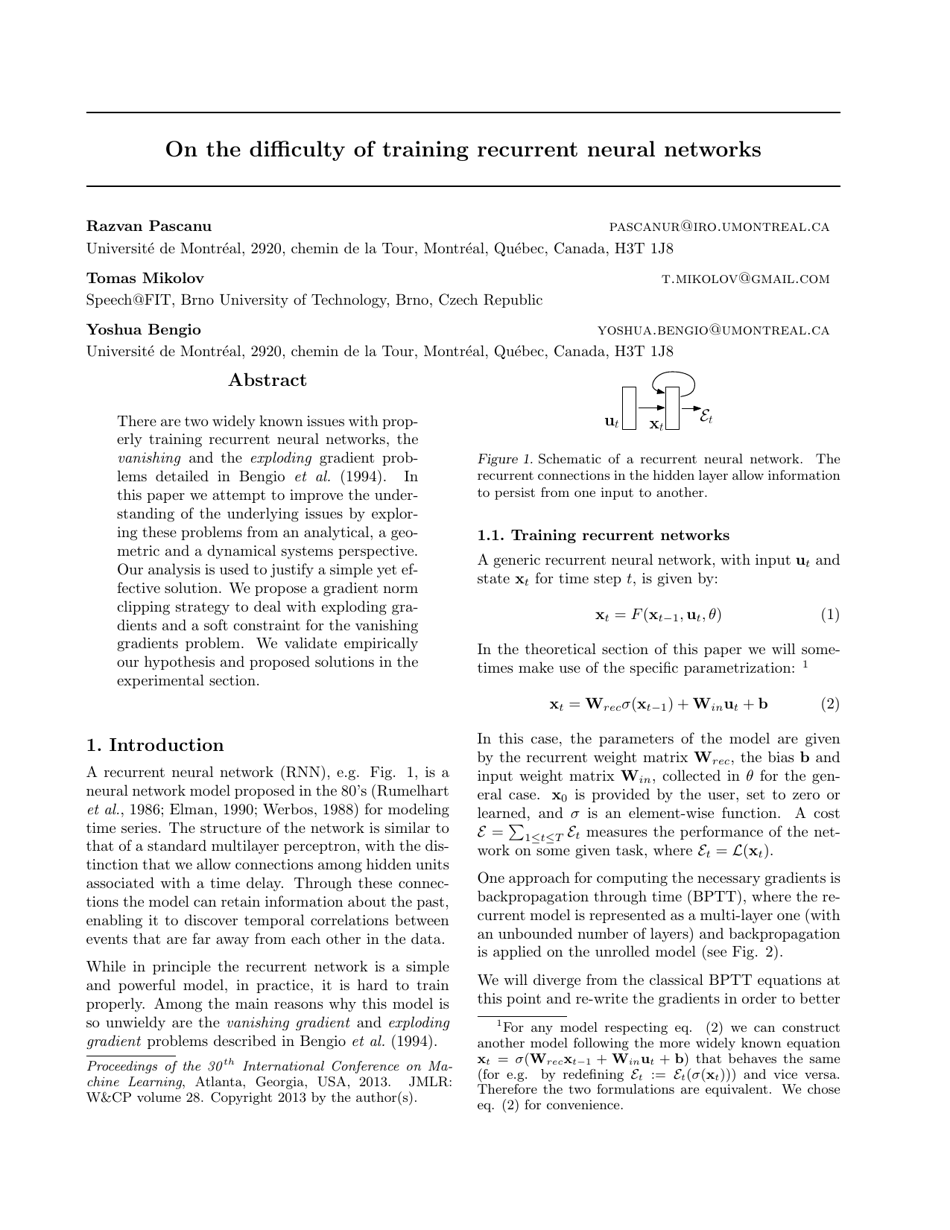

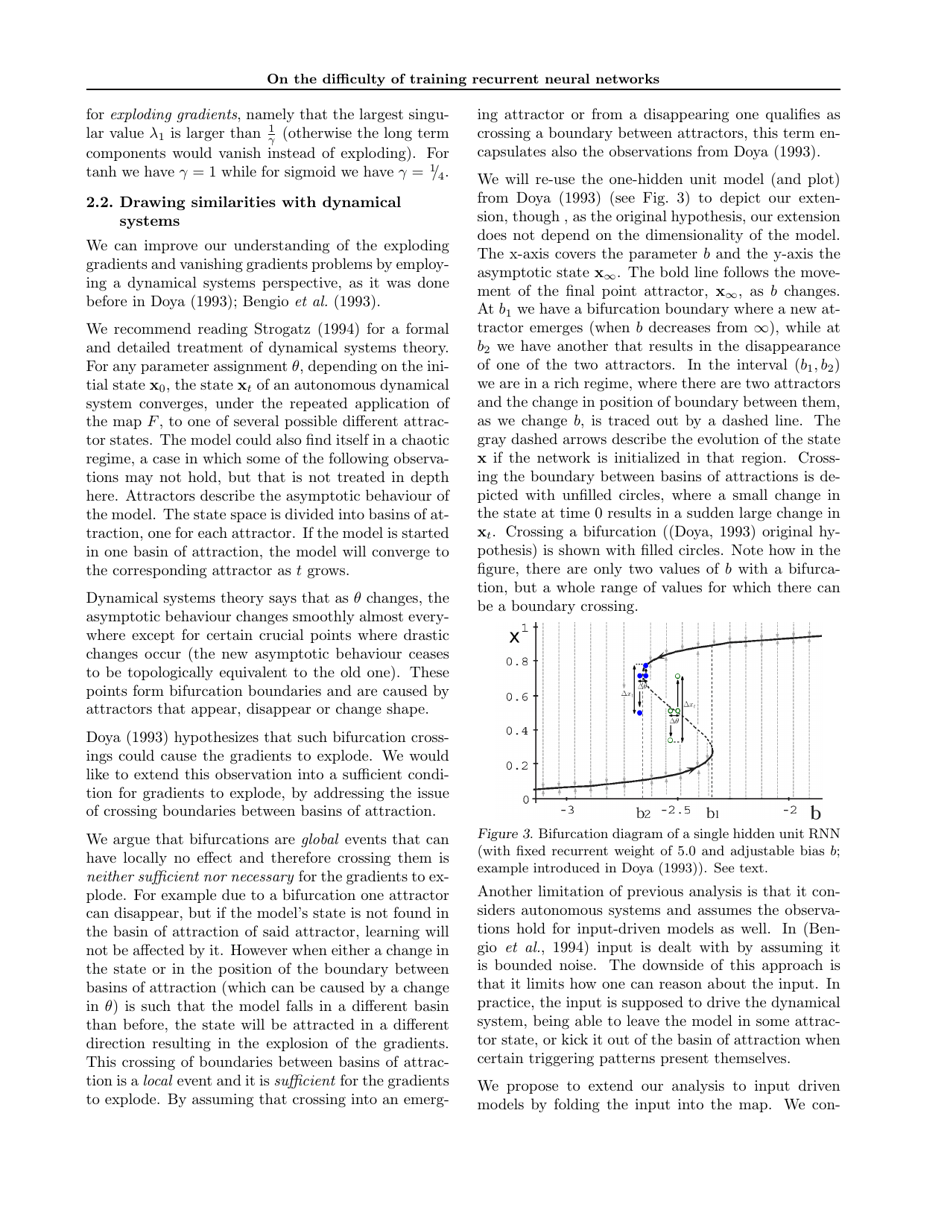

"On the Difficulty of Training Recurrent Neural Networks" is a research paper by Razvan Pascanu, Tomas Mikolov, and Yoshua Bengio. It examines why recurrent neural networks, a class of neural networks that process sequential data, are hard to train, focusing on the vanishing and exploding gradient problems. The paper analyzes the causes of these problems and proposes remedies, including gradient norm clipping for exploding gradients and a soft constraint for vanishing gradients.

FAQ

Q: Who are the authors of the document?

A: Razvan Pascanu, Tomas Mikolov, Yoshua Bengio

Q: What is the title of the document?

A: On the Difficulty of Training Recurrent Neural Networks

Q: What is the topic of the document?

A: Training recurrent neural networks

Q: Who is the intended audience for this document?

A: Researchers and developers interested in neural networks

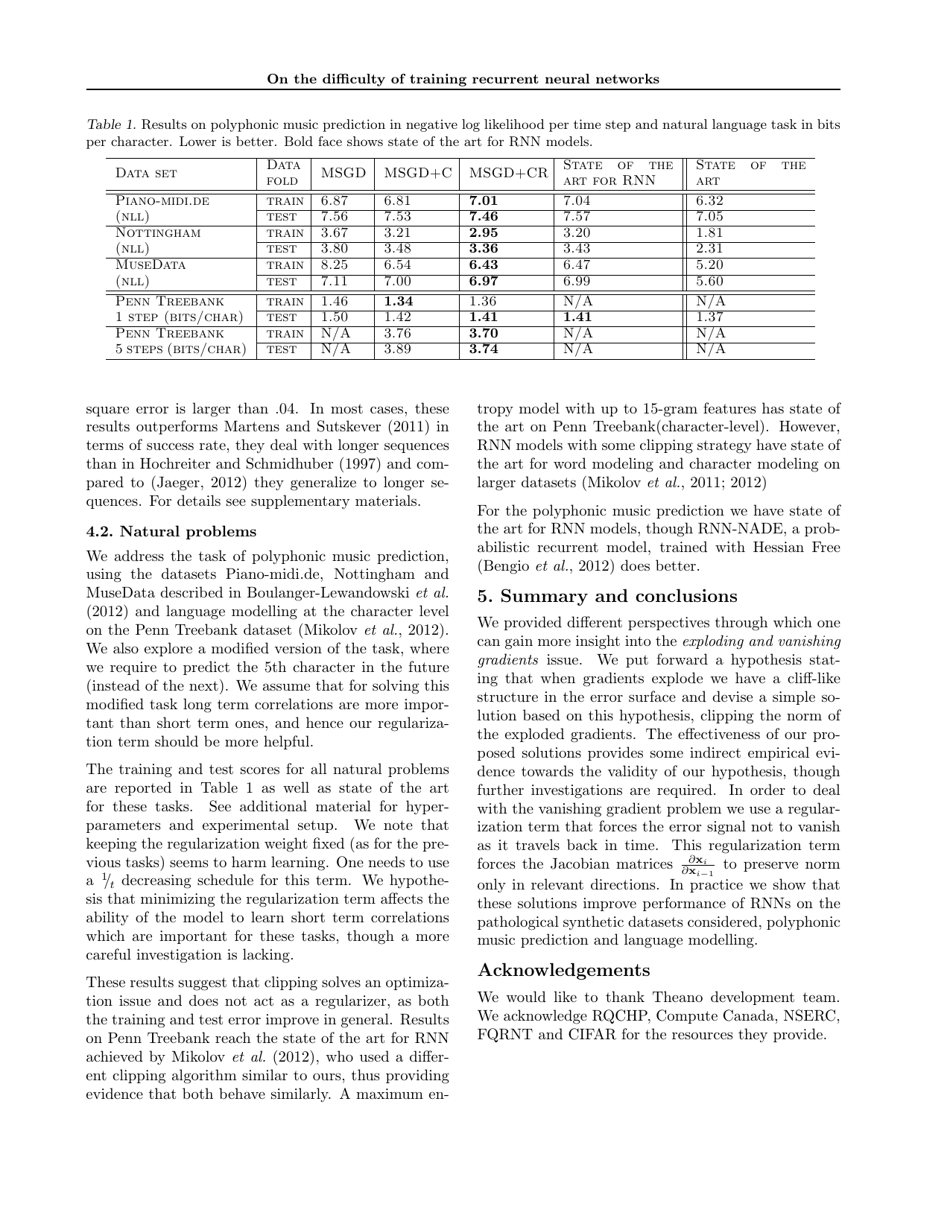

Q: What are some of the challenges in training recurrent neural networks?

A: Vanishing and exploding gradients, which make long-term dependencies hard to learn
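
As a rough illustration of the answer above, the sketch below uses a deliberately simplified scalar, linear recurrence h_t = w * h_{t-1}. This toy model and the function name `backprop_factor` are assumptions made for illustration, not the paper's setup. In this model the gradient of the final state with respect to the initial state is w multiplied by itself once per time step, so it shrinks toward zero when |w| < 1 and grows without bound when |w| > 1.

```python
def backprop_factor(w, steps):
    """Return d h_T / d h_0 for the scalar linear recurrence h_t = w * h_{t-1}."""
    factor = 1.0
    for _ in range(steps):
        # Each time step multiplies the backpropagated gradient by the recurrent weight.
        factor *= w
    return factor


# Hypothetical weights chosen only to show the two regimes over 50 time steps.
vanishing = backprop_factor(0.5, 50)  # |w| < 1: gradient shrinks toward zero
exploding = backprop_factor(1.5, 50)  # |w| > 1: gradient grows very large

print(f"w=0.5 over 50 steps: {vanishing:.3e}")
print(f"w=1.5 over 50 steps: {exploding:.3e}")
```

In a real recurrent network the scalar w is replaced by a product of Jacobian matrices, so the behavior depends on the recurrent weight matrix and the activation function's derivative rather than on a single number.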

Q: What are some potential solutions to training recurrent neural networks?

A: Gradient norm clipping for exploding gradients; for vanishing gradients, a regularization term (soft constraint) or gated architectures such as LSTM and GRU
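
Gradient norm clipping can be sketched in a few lines. The version below is a minimal, framework-free illustration that assumes gradients are stored as plain lists of floats; the function name `clip_gradient_norm` and the threshold value are hypothetical choices, not taken from the paper. When the combined L2 norm of all gradients exceeds the threshold, every gradient is rescaled by the same factor, so the update direction is preserved and only its size is capped.

```python
import math


def clip_gradient_norm(grads, threshold):
    """Rescale gradients so their global L2 norm is at most `threshold`.

    grads: list of lists of floats, one inner list per parameter group.
    Returns the (possibly rescaled) gradients and the norm before clipping.
    """
    total_norm = math.sqrt(sum(g * g for group in grads for g in group))
    if total_norm > threshold:
        scale = threshold / total_norm
        # Same scale for every entry, so the gradient direction is unchanged.
        grads = [[g * scale for g in group] for group in grads]
    return grads, total_norm


# Hypothetical gradients whose global norm is sqrt(9 + 16 + 144) = 13.
grads = [[3.0, 4.0], [12.0]]
clipped, norm_before = clip_gradient_norm(grads, threshold=1.0)
norm_after = math.sqrt(sum(g * g for group in clipped for g in group))

print(norm_before, norm_after)
```

Deep learning frameworks typically ship their own clipping utilities, and in practice those would normally be used instead of a hand-written loop like this one.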