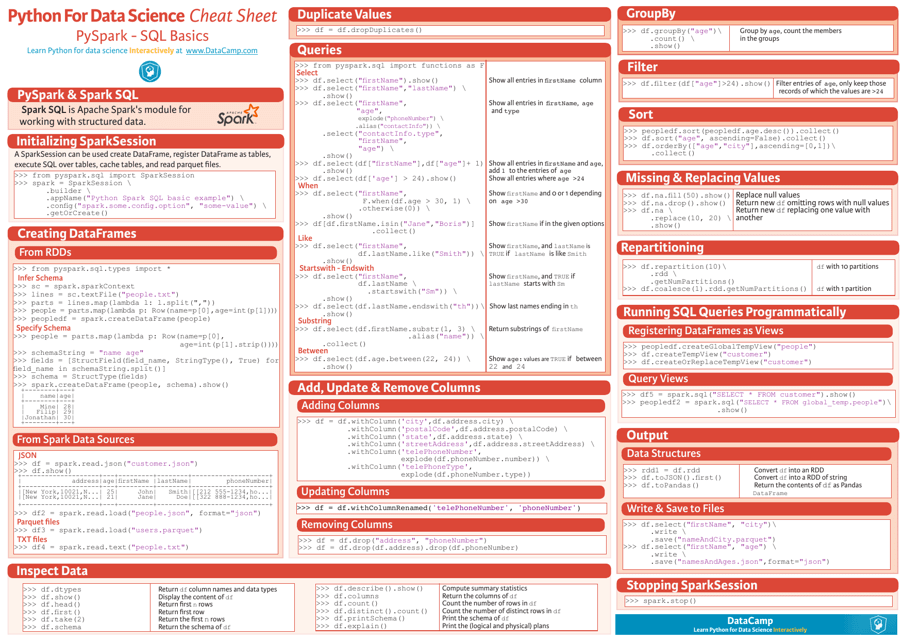

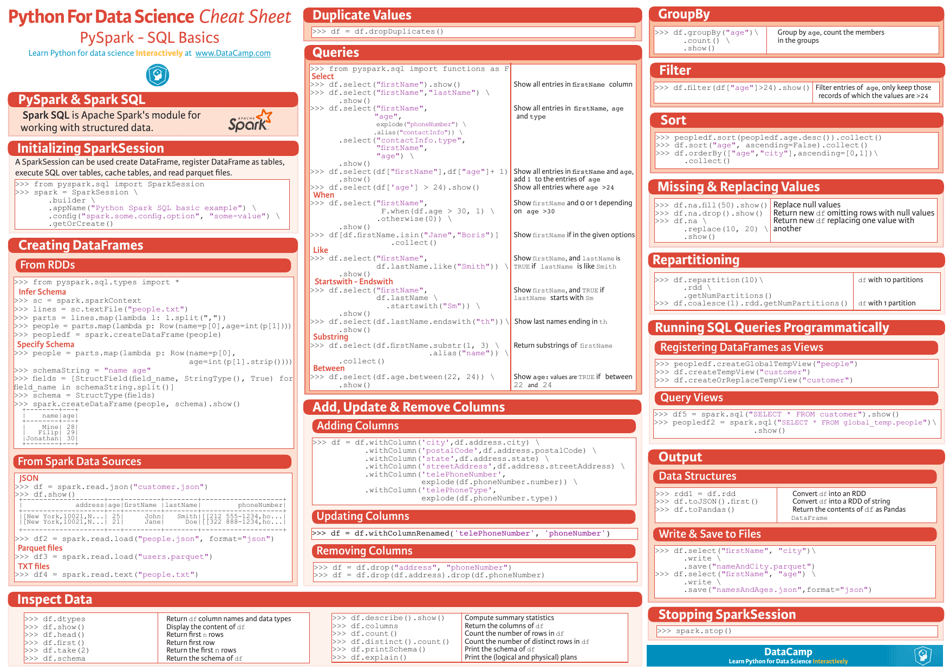

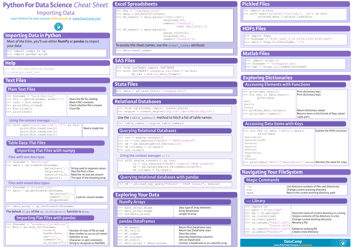

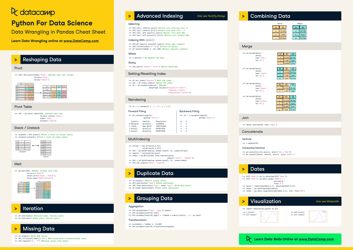

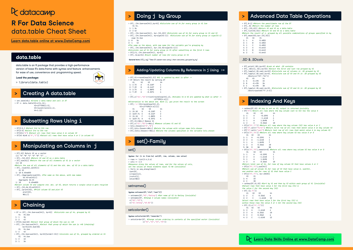

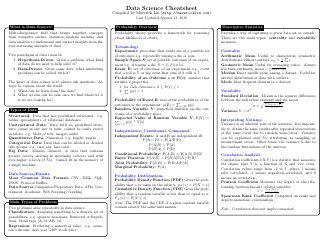

Python for Data Science Cheat Sheet - PySpark SQL Basics

The Python for Data Science Cheat Sheet - PySpark SQL Basics is a quick reference guide to PySpark SQL. It covers the basic syntax and functionality of PySpark's SQL module, which is used for data manipulation and analysis in data science.

FAQ

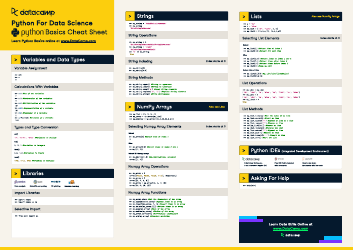

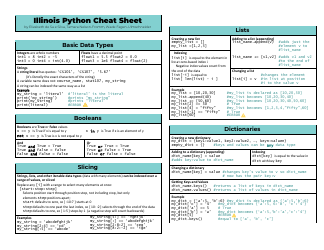

Q: What is Python for Data Science?

A: Python for data science refers to using the Python programming language, together with its ecosystem of libraries and tools, to analyze and manipulate data.

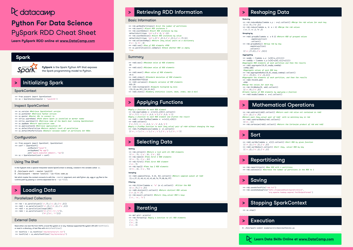

Q: What is PySpark?

A: PySpark is the Python API for Apache Spark, a fast, general-purpose cluster computing system for large-scale data processing.

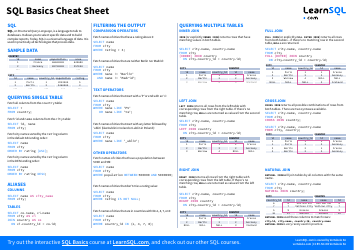

Q: What is SQL?

A: SQL stands for Structured Query Language and is a language used for managing and manipulating relational databases.

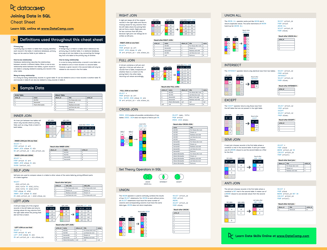

Q: What are the basics of PySpark SQL?

A: The basics of PySpark SQL include creating a SparkSession (the entry point to the API), creating DataFrames, running SQL queries against DataFrames, and writing SQL expressions to filter and transform data.

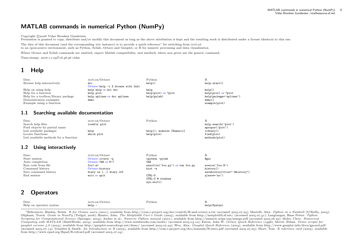

Q: How do you create a DataFrame in PySpark?

A: You can create a DataFrame in PySpark by reading data from sources such as CSV, JSON, or Parquet files through spark.read, by converting a local Python collection with spark.createDataFrame(), or by converting an existing RDD (Resilient Distributed Dataset) into a DataFrame.

Q: How do you perform data operations using SQL queries in PySpark?

A: Register the DataFrame as a temporary view (for example with createOrReplaceTempView()), then run SQL queries against that view with spark.sql(). Each query returns a new DataFrame.

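Q: What are SQL expressions in PySpark?

A: SQL expressions are snippets of SQL syntax, such as "salary + 500" or "upper(name)", that PySpark evaluates as column expressions. You can pass them as strings to methods like selectExpr(), filter(), and where(), or wrap them with the expr() function to use them anywhere a Column is expected, including in selection, filtering, and aggregation.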

Q: How can you filter data using SQL expressions in PySpark?

A: Use the DataFrame's filter() method, or its alias where(), and pass the condition either as a SQL expression string (for example "salary > 4500") or as a Column expression.

Q: How can you aggregate data using SQL expressions in PySpark?

A: Call groupBy() with the grouping columns, then agg() with one or more aggregate expressions. The aggregates can be built with functions from pyspark.sql.functions, or from SQL strings wrapped in expr(), such as expr("avg(salary)").

Q: What are some other useful functions in PySpark SQL?

A: Other commonly used DataFrame methods include select(), join(), orderBy(), distinct(), and union(), among others.